Azure Hub Spoke Virtual Network Design Best Practices

- Brett Borschel

- Jan 12, 2022

Updated: May 4, 2022

With all of the recent changes Microsoft has made to its Azure network architecture, you may be wondering: what does a "best practices" enterprise hub-and-spoke cloud architecture even look like?

After spending a lifetime working in traditional zone-based firewall data center design, the Azure cloud's network topology always felt wrong to me. It was all too simple. Its reliance on layer 4 NSGs to secure critical resources felt Mickey Mouse, and the routing tables pointing to firewalls were mostly static. Where is the traffic inspection? Where is the dynamic routing?

The distributed cloud model of "per-VNet" micro-services proved hard to manage and drove up costs. I have seen companies with half a dozen Azure Firewalls in their spokes that could have been consolidated into a single firewall using a properly designed hub-spoke topology.

It appears that Microsoft has been listening and has introduced new services and upgrades to help solve these problems. A recent update to the native Azure Firewall allows UDRs to be placed on the AzureFirewallSubnet, which helps move the firewall to the center of the cloud network. NVA firewall deployments have also gotten better and better with the rollout of Azure Route Server.

When we look further into how we segment our spoke virtual networks using these routing features to place our NGFW at the center of the network topology, we can finally see the end game clearly: a true "zone-based" cloud network topology!

What resources should I put in the hub?

At a minimum, the enterprise hub virtual network should be a relatively small network containing the following resources:

A pair of third-party Network Virtual Appliance (NVA) firewalls

A pair of third-party Network Virtual Appliance load balancers

Azure ExpressRoute Gateway (VNG)

Azure Route Server (ARS)

Two public Azure Load Balancers (ALB) for the Network Virtual Appliances

Two Azure Public IPs (PIP)

One Azure Internal Load Balancer (ILB)

How large should the hub virtual network be?

I will go into more detail about IP addressing plans for the enterprise cloud in my next article, but for now we will concentrate on our company's primary production hub network.

This is a relatively small network space with enough room for around eight subnets and a little room for growth. I've never been a big fan of doing CIDR math for small subnets because I think it invites simple mistakes, and with a good IP addressing plan we won't run short of available IP space. As such, I like to keep my subnets on octet boundaries (/24s, the size of an old classful Class C network). In this scenario, a /20 hub network allows for sixteen /24 subnets.
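
The arithmetic behind this choice is easy to sanity-check. Below is a minimal Python sketch that uses only the standard `ipaddress` module and the 10.100.0.0/20 range from this article. The five-address reservation reflects Azure's documented behavior (network address, default gateway, two addresses for Azure DNS, and broadcast).

```python
import ipaddress

# Hub address space from this article's plan.
hub = ipaddress.ip_network("10.100.0.0/20")

# Carve the /20 into /24s: 2 ** (24 - 20) = 16 subnets.
slots = list(hub.subnets(new_prefix=24))

# Azure reserves 5 addresses in every subnet (network address, default
# gateway, two for Azure DNS, broadcast), so a /24 leaves 251 usable IPs.
AZURE_RESERVED_PER_SUBNET = 5
usable_per_slot = slots[0].num_addresses - AZURE_RESERVED_PER_SUBNET

print(len(slots))           # 16
print(slots[0], slots[-1])  # 10.100.0.0/24 10.100.15.0/24
print(usable_per_slot)      # 251
```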

What subnets will I need?

EnterpriseHubWest-Vnet - 10.100.0.0/20

Firewall-Untrust Subnet - 10.100.1.0/24

Firewall-Trusted Subnet - 10.100.2.0/24

LoadBalancer-Untrust Subnet - 10.100.3.0/24

LoadBalancer-Trusted Subnet - 10.100.4.0/24

RouteServerSubnet - 10.100.5.0/24

GatewaySubnet - 10.100.6.0/24

NetworkManagement Subnet - 10.100.15.0/24
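
Because this plan relies on staying on octet boundaries rather than on CIDR math, a small script can catch typos before anything is deployed. The sketch below is an illustration, not a Microsoft tool, and the function name `validate` is hypothetical: it checks that each subnet sits inside the hub, that no two subnets overlap, and that the two Azure-reserved subnet names are present. The /27 floor for `GatewaySubnet` and `RouteServerSubnet` is an assumption based on Microsoft's sizing guidance and should be confirmed against current documentation.

```python
import ipaddress
from itertools import combinations

hub = ipaddress.ip_network("10.100.0.0/20")

# Subnet plan from this article (name -> prefix).
plan = {
    "Firewall-Untrust": "10.100.1.0/24",
    "Firewall-Trusted": "10.100.2.0/24",
    "LoadBalancer-Untrust": "10.100.3.0/24",
    "LoadBalancer-Trusted": "10.100.4.0/24",
    "RouteServerSubnet": "10.100.5.0/24",
    "GatewaySubnet": "10.100.6.0/24",
    "NetworkManagement": "10.100.15.0/24",
}

# Azure-required subnet names -> longest prefix length we accept.
# Assumption: /27 or larger for both, per Microsoft's sizing guidance.
REQUIRED_SUBNETS = {"RouteServerSubnet": 27, "GatewaySubnet": 27}


def validate(hub, plan):
    """Hypothetical helper: return a list of problems (empty means OK)."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    problems = []
    for name, net in nets.items():
        if not net.subnet_of(hub):
            problems.append(f"{name} {net} is outside {hub}")
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} overlaps {b}")
    for name, longest in REQUIRED_SUBNETS.items():
        if name not in nets:
            problems.append(f"missing required subnet {name}")
        elif nets[name].prefixlen > longest:
            problems.append(f"{name} must be /{longest} or larger")
    return problems


print(validate(hub, plan))  # []
```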

What is the purpose of the Azure Route Server?

Azure Route Server is the glue that ties together the routes learned over ExpressRoute from on-prem, the native Azure spoke virtual network routes, the default route, and any site-to-site VPN routes advertised by the NVA firewalls. ARS exchanges these routes over BGP, so routing is very predictable and stable.

If two or more equal routes are programmed into the Azure virtual machines' effective routes, the VMs use Equal-Cost Multi-Path (ECMP) routing to choose one of the NVA instances for each traffic flow. As a consequence, SNAT is a must in this design if traffic symmetry is a requirement.
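
To see why SNAT matters, here is a simplified Python model of per-flow ECMP. It assumes a generic 5-tuple hash (SHA-256, chosen arbitrarily; Azure's actual hash is not modeled) and two hypothetical NVA addresses. The point is that nothing ties the reply direction to the NVA that carried the request unless that NVA source-NATs the flow, in which case the reply is addressed to it directly.

```python
import hashlib
from typing import List, NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: str
    src_port: int
    dst_port: int

    def reply(self) -> "Flow":
        """The same conversation as seen in the return direction."""
        return Flow(self.dst_ip, self.src_ip, self.proto, self.dst_port, self.src_port)


def ecmp_pick(flow: Flow, next_hops: List[str]) -> str:
    """Hash the 5-tuple so every packet of one flow uses the same next hop."""
    digest = hashlib.sha256("|".join(map(str, flow)).encode()).digest()
    return next_hops[int.from_bytes(digest[:8], "big") % len(next_hops)]


def reply_hop(flow: Flow, forward_hop: str, next_hops: List[str], snat: bool) -> str:
    """Return the NVA that the reply traverses."""
    if snat:
        # The NVA rewrote the source to its own IP, so the reply is
        # addressed to that same NVA.
        return forward_hop
    # Without SNAT the reply is routed on its own and hashed again.
    return ecmp_pick(flow.reply(), next_hops)


nvas = ["10.100.2.4", "10.100.2.5"]  # hypothetical active-active NVA IPs
flows = [Flow("10.101.0.10", "10.102.0.20", "tcp", 40000 + i, 443) for i in range(100)]

asymmetric = sum(
    reply_hop(f, ecmp_pick(f, nvas), nvas, snat=False) != ecmp_pick(f, nvas)
    for f in flows
)
print(f"{asymmetric} of {len(flows)} flows would return via the other NVA without SNAT")
```

With a stateful firewall, any flow that returns through the other NVA is typically dropped because that NVA never saw the session start.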

ARS supports a maximum of 8 BGP peers, so if we are going to scale out our NVAs for additional capacity, we are limited to eight NVAs.

The other downside to ARS is that, while spoke VMs use equal-cost multipath to route to the NVAs, we do not have a good way of truly load balancing our East-West traffic between our active-active NVAs.

We can solve this problem by creating default route (0.0.0.0/0) UDRs in our spoke virtual networks, with gateway route propagation disabled, that point to the internal load balancer in front of the NVA firewalls. This leaves the ExpressRoute on-prem and VPN routes pointing at the endpoints learned from ARS over BGP.
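
Azure selects a route by longest prefix match first and, for identical prefixes, prefers a user-defined route over a BGP route over a system route. The Python sketch below models that selection to show why a 0.0.0.0/0 UDR pulls internet and spoke-to-spoke traffic to the ILB while the more specific on-prem and VPN prefixes still follow their BGP next hops. The route table is hypothetical (including the ILB address 10.100.2.100 and the NVA address 10.100.2.4), and it assumes those BGP-learned prefixes are present in the spoke's effective routes.

```python
import ipaddress
from typing import List, NamedTuple


class Route(NamedTuple):
    prefix: str
    next_hop: str
    source: str  # "User" (UDR), "BGP", or "Default" (system route)


# Tie-break for identical prefixes: UDR beats BGP, BGP beats system routes.
SOURCE_PRIORITY = {"User": 0, "BGP": 1, "Default": 2}


def select_route(dest: str, table: List[Route]) -> Route:
    """Longest prefix match first; route source breaks ties."""
    addr = ipaddress.ip_address(dest)
    candidates = [r for r in table if addr in ipaddress.ip_network(r.prefix)]
    return min(
        candidates,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen, SOURCE_PRIORITY[r.source]),
    )


ILB = "10.100.2.100"  # hypothetical ILB in front of the NVA firewalls
spoke_routes = [
    Route("10.101.0.0/24", "VnetLocal", "Default"),
    Route("10.100.0.0/20", "VNetPeering", "Default"),
    Route("0.0.0.0/0", "Internet", "Default"),
    Route("192.168.0.0/16", "VirtualNetworkGateway", "BGP"),  # on-prem over ExpressRoute
    Route("172.16.0.0/16", "10.100.2.4", "BGP"),  # S2S VPN advertised by an NVA
    Route("0.0.0.0/0", "10.100.2.4", "BGP"),  # default advertised by an NVA
    Route("0.0.0.0/0", ILB, "User"),  # the default-route UDR
]

for dest in ["8.8.8.8", "10.102.0.10", "192.168.10.5", "172.16.1.1"]:
    print(dest, "->", select_route(dest, spoke_routes).next_hop)
# 8.8.8.8 -> 10.100.2.100
# 10.102.0.10 -> 10.100.2.100
# 192.168.10.5 -> VirtualNetworkGateway
# 172.16.1.1 -> 10.100.2.4
```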

Designing the spokes...

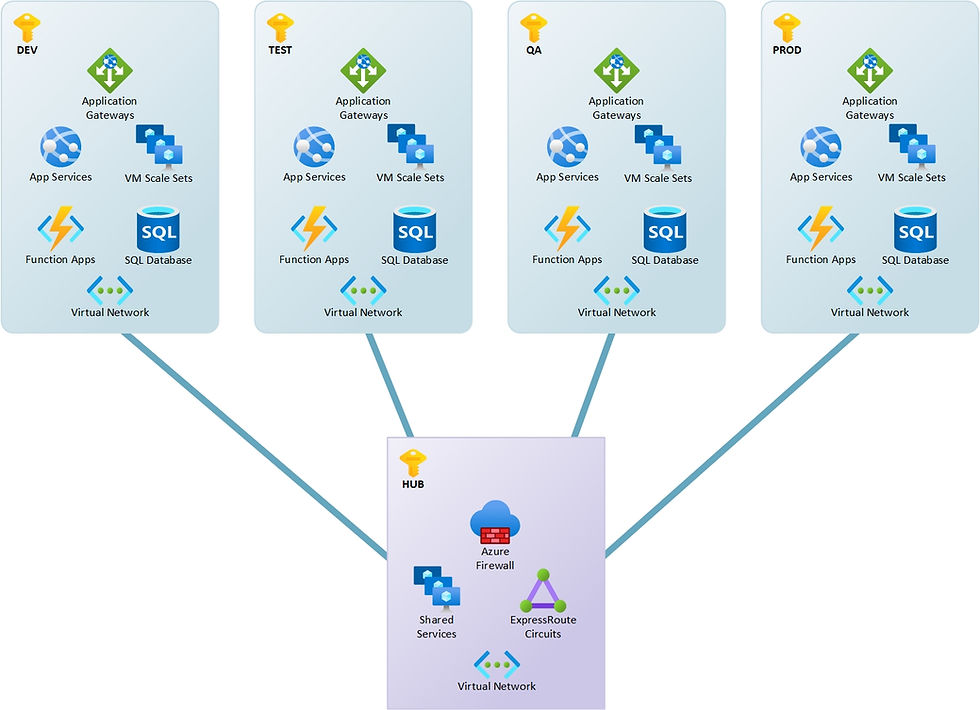

Previous best-practice designs in Azure called for segmenting workloads into separate virtual networks with microservices at the center.

One would commonly see individually built virtual networks, like the one below, for Prod, Testing, and Development. Inside these virtual networks, the app tier and database tier would be segmented using subnet-based NSGs, with little oversight beyond an allow/deny based on source, destination, and port. Shared services would be located in the hub.

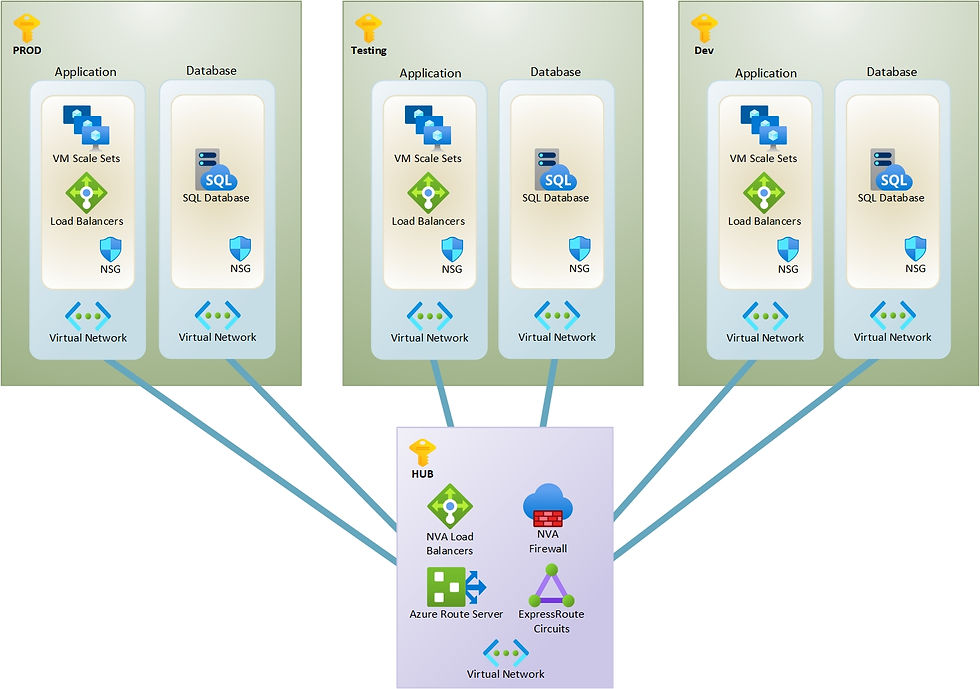

Now that spoke traffic is being forced through the hub firewall via BGP or UDRs, we can shrink each spoke virtual network to only one or two subnets, with one spoke per type of service.

Traffic in and out of the spokes must pass through the NGFW for inspection. Further protection within the destination subnet can be added with NIC-based NSGs or application security groups (ASGs), which protect individual servers within the virtual network from East-West attacks.
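
NSG evaluation itself is simple: rules are checked in ascending priority order and the first match wins. The Python sketch below models a NIC-level NSG on a database server for traffic between servers inside the same spoke, using two hypothetical ASGs, `asg-app` and `asg-db`, and an illustrative SQL port. It approximates the default `AllowVnetInBound` rule (priority 65000) as "allow any source" and omits the other default rules except `DenyAllInBound`, which is why the explicit deny at priority 4000 is needed.

```python
from typing import List, NamedTuple, Optional, Set


class Rule(NamedTuple):
    priority: int              # lower numbers are evaluated first
    source_asg: Optional[str]  # None matches any source
    dest_asg: Optional[str]    # None matches any destination
    port: Optional[int]        # None matches any port
    action: str                # "Allow" or "Deny"


def evaluate(rules: List[Rule], src_groups: Set[str], dst_groups: Set[str], port: int) -> str:
    """Walk rules in priority order; the first match decides, like an NSG."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.source_asg is not None and rule.source_asg not in src_groups:
            continue
        if rule.dest_asg is not None and rule.dest_asg not in dst_groups:
            continue
        if rule.port is not None and rule.port != port:
            continue
        return rule.action
    return "Deny"


db_nic_rules = [
    Rule(100, "asg-app", "asg-db", 1433, "Allow"),  # app tier to SQL only
    Rule(4000, None, "asg-db", None, "Deny"),       # everything else to the DB
    Rule(65000, None, None, None, "Allow"),         # ~AllowVnetInBound (simplified)
    Rule(65500, None, None, None, "Deny"),          # DenyAllInBound
]

print(evaluate(db_nic_rules, {"asg-app"}, {"asg-db"}, 1433))  # Allow
print(evaluate(db_nic_rules, {"asg-web"}, {"asg-db"}, 1433))  # Deny
print(evaluate(db_nic_rules, {"asg-app"}, {"asg-db"}, 22))    # Deny
```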

Using the new design, our cloud will look more like this: more virtual networks and fewer subnets, which increases the security of our cloud networks. In the new design, traffic from the application to the database is routed to the hub network NVA firewall for inspection before reaching the database.

Centralization of Network Services?

Forcing all internet traffic from the spokes through the hub network firewall not only increases security and visibility, it also removes the need for per-spoke micro-services like Application Gateways, localized public IPs, and spoke-based network firewalls.

In this architecture, we deploy NVA load balancers in the hub to simplify management of inbound web traffic load balancing. The destination server pool members reside in a spoke, while SSL offloading happens at the public network edge in the hub.

Introducing the "Identity Subscription"

Going deeper into the design, we see that by segmenting our spoke virtual networks into smaller, purpose-built networks, we create new opportunities to further protect shared services like Domain Controllers and Key Vaults.

This article was written with mid- to large-sized companies in mind, and I hope that after reading it you have a better idea of where to start your journey to the cloud.
